Apparently Interzone Games are having some difficulty getting established in Western Australia.

Two days ago I attended a cocktail function in order to hear a 'major announcement' from Interzone Games and the Western Australian Department of Industry & Resources (DOIR). The "Honorable Francis Logan MLA, Minister for Energy, Science and Innovation" was also coming along. Like the other attendees, I expected Interzone to announce they were setting up a studio in Perth, with assistance from the DOIR...

...but the Minister didn't even turn up! Hmmph. No explanation was given, other than "I'm sorry, we can't make the announcement we expected to" from Interzone CEO Robert J. Spencer. Weird.

This afternoon, I received a message in my inbox asking me to sign this online petition. It seems like someone inside DOIR changed their mind about the deal they were offering Interzone, which is really strange, as I believe that DOIR hosted and organized (read: financed) the function.

At least that explains the warm beer. :-).

Friday, December 15, 2006

Wednesday, December 13, 2006

Help, I'm addicted!

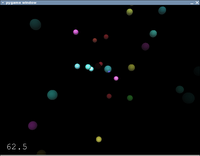

Phil Hassey (pygame hacker) has just released his first commercial title, Galcon. It's highly addictive, and I definitely recommend it.

It is a fast-paced multiplayer strategy game with very simple game mechanics, which you can pick up in less than a minute. Its simplicity and enormous potential for complex strategy make it a very compelling game. I've been absorbed in it for too many hours over the last 2 days.

Nice work Phil.

Tuesday, December 05, 2006

Nullarbor Game Competition

I met with some fellow game developers last Friday. Amongst other things, we had a chat about the upcoming Nullarbor Game Competition being held at the GO3 Electronic Entertainment Expo in Perth, Western Australia. Last year, my Krool Joolz entry came second. This year, I hope to do better :-)

GO3 is the sister event of the Tokyo Game Show. Apparently, _MTV Japan_ are covering GO3 and Nullarbor. And did I mention Richard Garriott is coming?* Wow. My entry had better be good; the whole world is watching. And maybe even Richard Garriott. :-)

I've heard and read a lot of good things about a game development environment called Unity 3D. I'm seriously considering using it, but that means a costly dev license and a new Mac. Perhaps I could find a local corporate sponsor... hmmm.

The alternative is either to grow my own engine again or to try an Open Source engine. Unfortunately, because learning a new engine API takes so much brain time, I really need to pick the right engine first... and I don't have much time. Nullarbor is 4 months away.

* This is an unconfirmed rumour. There are, however, many other famous names attending.

Tuesday, November 07, 2006

What's over the horizon?

Last week, I came to the realisation that my game programming ambitions will take far too long to realise while I remain a salaried, 40-hour-per-week employee.

So, I've decided to take the plunge and work on a contract basis, leaving at least 1-2 work days a week available for other opportunities. I've already started networking, and have met several interesting characters who might be interested in working with me on game-related projects. I have about 3 weeks left in my current permanent role, and after that will be seeking short-term contract work to fill the gaps. I'm a bit nervous about the risks I'm taking, but I believe that no progress can occur without some measure of risk.

Monday, October 23, 2006

Python at Perth IGDA meeting

I've just got back from a networking session organised by the IGDA chapter in Perth, Western Australia (that's my home city). For more information, read Nick Lowe's take on the event.

I caught up with some like-minded individuals, and some game producers who are looking to start up a dev shop in Perth or Melbourne. The interesting thing is... they are looking for Python programmers!

In fact, Python dominated a lot of the conversation. People are really waking up to the productivity gains a high-level language like Python can provide. I've been asked to give a demonstration of 2 or 3 games I've worked on, and the technology behind them (tomorrow, at a local university). Should be fun!

Thursday, October 19, 2006

Rubbery, bouncy sprites are my next big thing.

QGL is limited.

- It has no shadows.

- It has a slow culling algorithm.

- There is no animation support for 3D models.

I'm going to focus on a new idea I've had, which will let me create morphable, bouncy sprites. The inspiration comes from Gish, and the wobbly windows I've been throwing around the screen in Compiz/XGL.

If I map a texture onto a grid instead of a single quad, I will be able to morph the texture by morphing the vertices of the grid. Simple, huh? I could morph the grid vertices by interpolating between key frames, or algorithmically (think of a sine wave ripple effect).
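The grid idea can be sketched in a few lines of Python. This is a minimal sketch under my own assumptions, not QGL code: `make_grid`, `lerp_grid` and `ripple` are hypothetical names, vertices are plain `(x, y, u, v)` tuples, and actually drawing them (for example as rows of textured quads) is left out.

```python
import math

def make_grid(n, width=1.0, height=1.0):
    """Return (x, y, u, v) vertices for an n-by-n cell grid.

    (x, y) is the vertex position; (u, v) is the texture coordinate,
    which stays fixed while the position is morphed.
    """
    verts = []
    for j in range(n + 1):
        for i in range(n + 1):
            u, v = i / n, j / n
            verts.append((u * width, v * height, u, v))
    return verts

def lerp_grid(key_a, key_b, t):
    """Interpolate vertex positions between two key frames (0 <= t <= 1)."""
    return [
        (ax + (bx - ax) * t, ay + (by - ay) * t, u, v)
        for (ax, ay, u, v), (bx, by, _, _) in zip(key_a, key_b)
    ]

def ripple(verts, time, amplitude=0.05, wavelength=0.5, speed=1.0):
    """Displace each vertex vertically with a travelling sine wave."""
    k = 2 * math.pi / wavelength
    return [
        (x, y + amplitude * math.sin(k * x - speed * time), u, v)
        for (x, y, u, v) in verts
    ]
```

Because the texture coordinates never change, the image simply stretches with the grid, whichever way the positions are morphed.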

I could even tie the vertices together using Verlet integration and a constraint solver, which would let me create bouncy, rubbery sprites that morph in response to collisions. This requires getting my head around some new (to me) mathematics, which will take some time.
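A minimal sketch of Verlet integration with an iterative distance-constraint solver, assuming 2D point masses joined by fixed-length links. The relaxation loop follows the widely used approach from Thomas Jakobsen's "Advanced Character Physics" article; the names `Particle`, `verlet_step` and `satisfy_constraints` are hypothetical, and the floor test is a stand-in for real collision handling.

```python
import math

class Particle:
    def __init__(self, x, y):
        self.x, self.y = x, y
        self.px, self.py = x, y  # previous position; velocity is implicit

def verlet_step(particles, dt, gravity=-9.8):
    """Advance every particle using position Verlet integration."""
    for p in particles:
        vx, vy = p.x - p.px, p.y - p.py  # implicit velocity from last step
        p.px, p.py = p.x, p.y
        p.x += vx
        p.y += vy + gravity * dt * dt

def satisfy_constraints(particles, links, iterations=5, floor=0.0):
    """Relax distance constraints by repeated projection.

    links is a list of (i, j, rest_length) tuples.
    """
    for _ in range(iterations):
        for i, j, rest in links:
            a, b = particles[i], particles[j]
            dx, dy = b.x - a.x, b.y - a.y
            dist = math.hypot(dx, dy) or 1e-9
            # Move each end half of the length error along the link.
            diff = (dist - rest) / dist * 0.5
            a.x += dx * diff
            a.y += dy * diff
            b.x -= dx * diff
            b.y -= dy * diff
        for p in particles:
            if p.y < floor:  # crude collision: push back out of the floor
                p.y = floor
```

Each frame would call `verlet_step` and then `satisfy_constraints`. Linking neighbouring grid vertices (plus a few diagonals to resist shearing) is one way to make a sprite wobble when a collision pushes some of its vertices around.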

Stay tuned.

Monday, October 16, 2006

OpenGL-ctypes 3.0.0a4 works for me.

I've managed to install OpenGL ctypes 3.0.0a4, and have run the QGL tests.

Everything worked as expected, except for the lighting tests. I suspect that my lighting code is at fault, as I've had intermittent problems with it on ATI cards. I ran the tests under Xgl, which may have affected things in ways I don't know about...

Wednesday, October 04, 2006

Back into the dark ages...

I've been experimenting with the OpenGL shadow extension. It can produce very nice shadows, and it is quite easy to implement.

I want to make it usable from QGL, which presents a problem. Speed.

I have to make multiple passes through my scenegraph to render a depth map from the POV of each light in the scene. Each extra pass QGL makes over the scenegraph is going to consume frames per second.

I've previously written a render visitor in Pyrex, which provided only a minimal speed increase. I think this is because the double dispatch pattern I'm using requires a couple of Python function calls for each node that is rendered.
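To show where the per-node cost comes from, here is a minimal sketch of the double dispatch pattern, using hypothetical `Mesh`, `Group` and `RenderVisitor` classes (not QGL's) and a list standing in for OpenGL calls. Every node costs at least two interpreted calls, `accept()` and then `visit_*()`, and a Pyrex visitor cannot remove them while the nodes themselves are Python objects.

```python
class Mesh:
    def __init__(self, name):
        self.name = name

    def accept(self, visitor):       # Python call #1 for this node
        visitor.visit_mesh(self)     # Python call #2 for this node

class Group:
    def __init__(self, children):
        self.children = children

    def accept(self, visitor):
        visitor.visit_group(self)

class RenderVisitor:
    def __init__(self):
        self.log = []                # stands in for OpenGL calls

    def visit_group(self, group):
        self.log.append("group")
        for child in group.children:
            child.accept(self)

    def visit_mesh(self, mesh):
        self.log.append(mesh.name)
```

With N nodes that is roughly 2N Python calls per pass, and shadow mapping multiplies the number of passes by the number of lights.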

It seems that the only way to get around this is to move the entire scenegraph data structure into a C extension, providing an API to allocate and modify C structures from my Python code. This effectively means ditching the current QGL and writing a C render engine, which is not something I intended to do.

Sunday, September 24, 2006

Shadows in QGL

Shadows help ground models in a scene and assist with depth perception. QGL really needs shadows.

Current best practice revolves around the stencil buffer: a volume extruded from the model's silhouette, away from the light, is used to mark the surrounding geometry that lies in shadow, creating the illusion of a shadow. This technique is often referred to as the shadow volume algorithm.

A different algorithm, with different tradeoffs, is the shadow mapping algorithm. There is even hardware support available for implementing this technique.

I'd like to implement shadow mapping in QGL, as it is likely to be much faster than a shadow volume technique. To do this, I need to get hold of the ARB_shadow extension, which PyOpenGL doesn't (yet?) provide. I'll probably have to use ctypes and talk to the libGL library directly.
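ARB_shadow adds no new functions, only new texture-parameter enumerants, so ctypes access mostly means calling `glTexParameteri` with the right constants. A minimal sketch, assuming a GL context is already current and a depth texture is bound; `load_libgl` and `enable_shadow_compare` are hypothetical helpers, and the constant values are those defined by the ARB_shadow specification.

```python
import ctypes
import ctypes.util
import sys

# Enumerant values from the OpenGL headers and the ARB_shadow spec.
GL_TEXTURE_2D = 0x0DE1
GL_LEQUAL = 0x0203
GL_TEXTURE_COMPARE_MODE_ARB = 0x884C
GL_TEXTURE_COMPARE_FUNC_ARB = 0x884D
GL_COMPARE_R_TO_TEXTURE_ARB = 0x884E

def load_libgl():
    """Open the system OpenGL library with ctypes (Win32 or Linux)."""
    if sys.platform == "win32":
        return ctypes.windll.opengl32
    path = ctypes.util.find_library("GL")
    if path is None:
        raise OSError("libGL not found")
    return ctypes.CDLL(path)

def enable_shadow_compare(gl, target=GL_TEXTURE_2D):
    """Make the bound depth texture perform shadow-map comparisons.

    With COMPARE_R_TO_TEXTURE and LEQUAL, a texture lookup returns 1.0
    where the fragment's light-space depth (r) is <= the stored depth
    (lit) and 0.0 otherwise (in shadow).
    """
    gl.glTexParameteri(target, GL_TEXTURE_COMPARE_MODE_ARB,
                       GL_COMPARE_R_TO_TEXTURE_ARB)
    gl.glTexParameteri(target, GL_TEXTURE_COMPARE_FUNC_ARB, GL_LEQUAL)
```

Passing the library object in as a parameter keeps the helper testable without a GPU, and lets the same code work with either `load_libgl()` or PyOpenGL's `OpenGL.GL` module.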

Thursday, September 21, 2006

QGL-9 Released, with a Shiny New Particle Emitter!

I've just released a new version of QGL. New features are:

* A ParticleEmitter leaf node.

* All leaf nodes moved into qgl.scene.state namespace.

The particle emitter leaf node generates a bunch of particles within an arc and with a velocity range. You then call the .tick method of the ParticleEmitter, which animates the particles. It uses numpy, so it's reasonably fast. It could use a few more features, but I'll need to use it for a while to find out what they might be. :-)
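A rough sketch of how such an emitter might be vectorised with numpy. The constructor arguments, the 2D layout and the simple Euler step in `tick()` are my assumptions; the post only says that particles start within an arc, with a range of velocities, and are animated by `.tick`. It needs numpy 1.17 or later for `default_rng`.

```python
import numpy as np

class ParticleEmitter:
    """Hypothetical emitter: positions and velocities live in numpy arrays."""

    def __init__(self, count, arc=(0.0, np.pi), speed=(1.0, 2.0), seed=None):
        rng = np.random.default_rng(seed)
        angles = rng.uniform(arc[0], arc[1], count)      # direction within the arc
        speeds = rng.uniform(speed[0], speed[1], count)  # magnitude within range
        self.position = np.zeros((count, 2))
        directions = np.column_stack((np.cos(angles), np.sin(angles)))
        self.velocity = directions * speeds[:, None]

    def tick(self, dt, gravity=(0.0, -9.8)):
        """Animate every particle with one vectorised Euler step."""
        self.velocity += np.asarray(gravity) * dt
        self.position += self.velocity * dt
```

Each `tick()` updates every particle with a couple of array operations instead of a Python loop, which is where the speed comes from.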

Wednesday, September 20, 2006

Concurrency without Threads

I'm still investigating options for implementing concurrent solutions in game-like applications. I haven't found many high-level languages that let me use real threads. Ruby, Chicken Scheme, Bigloo Scheme, Erlang: none of these provide real threading.

I think it's time I stopped thinking about threads, and started thinking about concurrency.

One idea I'm considering is a Linda-style system designed to provide a tuple-space on the local machine only. For performance reasons, access to the tuple-space would need to be implemented via some kind of shared memory facility.

The tuple-space approach could be ideal for game development processes. For example, the main process could push scene information into the tuple-space, where it is read by a second process which performs culling operations on the scene, and pushes the viewable set of scene nodes back into the tuple space. Meanwhile, the main process could be running the physics simulation, processing sound etc. This approach would require writing a separate program which works cooperatively and concurrently with the main program. No forking, and no threading.
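The Linda operations are small enough to sketch. This toy version assumes `out`, `rd` and `in_` (`in` is a Python keyword) as the API and `ANY` as a wildcard; it shares tuples between threads in one process using `threading.Condition`, so it demonstrates the semantics only, not the separate-process, shared-memory design described above.

```python
import threading

ANY = object()  # wildcard that matches any value in a template

class TupleSpace:
    """A toy Linda-style tuple space (in-process only, not shared memory)."""

    def __init__(self):
        self._tuples = []
        self._cond = threading.Condition()

    def out(self, tup):
        """Publish a tuple and wake up any waiting readers."""
        with self._cond:
            self._tuples.append(tuple(tup))
            self._cond.notify_all()

    @staticmethod
    def _matches(template, tup):
        return len(template) == len(tup) and all(
            t is ANY or t == v for t, v in zip(template, tup))

    def _find(self, template, remove, timeout):
        with self._cond:
            while True:
                for i, tup in enumerate(self._tuples):
                    if self._matches(template, tup):
                        return self._tuples.pop(i) if remove else tup
                # The timeout applies to each wait, not the whole call.
                if not self._cond.wait(timeout):
                    raise TimeoutError(template)

    def rd(self, template, timeout=None):
        """Block until a matching tuple exists; return it, leaving it in place."""
        return self._find(template, False, timeout)

    def in_(self, template, timeout=None):
        """Block until a matching tuple exists; remove and return it."""
        return self._find(template, True, timeout)
```

In the culling example, the main program would `out(("scene", frame, nodes))`, the culler would `in_(("scene", ANY, ANY))` and reply with `out(("visible", frame, visible_nodes))`, and the main program would collect the answer with `in_(("visible", frame, ANY))` when it is ready to draw.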

Friday, September 15, 2006

If I had a Concurrent Python, what would I do with it?

Something like this:

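```python
from threading import Thread

CORE_COUNT = 2  # This is how many CPUs or cores we have to play with.

def xmap(fn, seq):
    """
    Run a function over a sequence, and return the results.
    The workload is split over multiple threads.
    """
    class Mapper(Thread):
        def __init__(self, fn, seq):
            Thread.__init__(self, target=self.map, args=(fn, seq))
            self.start()

        def map(self, fn, seq):
            # Inside this method, map() is the builtin, not self.map.
            self.results = list(map(fn, seq))

    # Split seq into CORE_COUNT contiguous chunks; the first r chunks
    # get one extra item so that every item is covered.
    newseq = []
    n = len(seq) // CORE_COUNT
    r = len(seq) % CORE_COUNT
    b, e = 0, n + min(1, r)
    for i in range(CORE_COUNT):
        newseq.append(seq[b:e])
        r = max(0, r - 1)
        b, e = e, e + n + min(1, r)

    # Start one thread per chunk, then join them in order so the
    # results come back in the same order as seq.
    results = []
    for thread in [Mapper(fn, s) for s in newseq]:
        thread.join()
        results.extend(thread.results)
    return results
```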

This function takes care of starting, joining and collecting results from threads. It lets the programmer map a function over a sequence and have the work done in parallel. Of course, in CPython the GIL means the threads won't actually execute Python code in parallel, but it might prove useful in PyPy or IronPython. Parallel processing in this style would be very useful for applications that work with large data sets, i.e. games!

A game could be written to take advantage of the xmap function whenever possible. It would only make sense to use it when iterating over large data sets or using long running functions.

Wednesday, September 13, 2006

Concurrent Python on Win32? No Such Animal.

I want a cross-platform, concurrent Python. It seems I can't have it. I really am astounded that there is no way to implement concurrent programs in Python on Win32 without resorting to socket communication between two different programs. A heinous kludge, from my perspective.

The second core on my new notebook will not be available for my Python games. Python used to be my secret weapon, when it came to game development.

I don't want to get left behind in the coming multi-core revolution. Maybe it's time to move on.

Monday, September 11, 2006

QGL-8 Available: Shaders, Materials and Much More...

I've just uploaded QGL-8 to the Python Cheese Shop. This version contains the new shader functionality, plus a very useful Static Node, which compiles all its children into a single display list. The attached screenshot shows the new Material leaf class being used in a neat (contributed) particle demo.
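A display list is recorded once and replayed on every frame. A minimal sketch of how a static node might do that, assuming a `gl` object exposing the OpenGL 1.x display-list calls (PyOpenGL's `OpenGL.GL` module does) and children with a `render()` method; this `StaticNode` is hypothetical, not QGL-8's actual class.

```python
class StaticNode:
    """Hypothetical node that compiles its children into one display list.

    A current GL context is required when render() is first called.
    """

    def __init__(self, gl, children):
        self.gl = gl
        self.children = children
        self.list_id = None

    def render(self):
        gl = self.gl
        if self.list_id is None:
            # First draw: record every child's GL commands once.
            self.list_id = gl.glGenLists(1)
            gl.glNewList(self.list_id, gl.GL_COMPILE)
            for child in self.children:
                child.render()
            gl.glEndList()
        # Every draw, including the first: replay the recorded commands.
        gl.glCallList(self.list_id)
```

The trade-off is in the name: once compiled, the list does not notice later changes to its children, so it only suits geometry that never changes.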

Wednesday, September 06, 2006

My Pyweek effort is over.

Unfortunately, Real Life issues have torpedoed my Pyweek efforts. I just cannot focus on game development right now. Too many disruptive, disturbing thoughts running around my head. Oh well.

Perhaps next time.

Wednesday, August 30, 2006

CG in the Multi-core Style

I've just read two interesting articles about real-time ray tracing.

This is exciting stuff.

In the mid-90s, Ken Silverman wrote a bleeding-edge 2.5D ray casting engine, which was used to create Duke Nukem 3D. It was, however, quickly eclipsed by Carmack's Quake engine, which was based on triangle rasterization. Ten years on, not a whole lot has changed; things just got faster, hotter and bigger. Looking forward, however, there is some exciting new graphics tech coming our way. With quad-core, multi-GHz CPUs, real-time ray tracing might soon become a reality.

This raises everyone's favorite Python whipping boy, the GIL, in another context.

The GIL prevents Python from scaling across multiple processors using threads. Hopefully someone will solve this problem soon. I want to use multiple threads on my multi-core chip in Python, but at the moment I cannot. Sure, I can start a second process and do something clever with that, but on Win32 this is rarely worth the effort, and generally not very useful for game programming. As more users get multi-core chips, Python won't scale as naturally as other threaded languages.

Will anyone step up and take on this challenge? I hope so.

Friday, August 25, 2006

QGL / EC Fly-by Demo

I've spent the afternoon building a spacecraft fly-by demo for Entity Crisis. I've found that it is actually very hard to create a nice visual. Just because something is 3D doesn't magically make it a great-looking scene. A lot of work has to go into getting the lighting and material properties right, but the majority of the effort goes into texturing. A boring model comes alive with the right texture. Fortunately, I know a few good texture artists. :-)

I've also realized that a scenegraph needs nodes which can do things like enable and disable depth testing, blending etc. Not everything can be abstracted away into high level classes, the programmer often needs access to lower level functions.
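One way to expose such low-level switches is a node that turns a capability off for its children only and then restores it. A minimal sketch, with hypothetical `gl_disabled` and `DisableNode` names and an injected `gl` object providing `glIsEnabled`, `glEnable` and `glDisable`; `glPushAttrib(GL_ENABLE_BIT)` with `glPopAttrib()` would be an alternative way to restore the previous state.

```python
from contextlib import contextmanager

@contextmanager
def gl_disabled(gl, capability):
    """Temporarily disable a GL capability, restoring its previous state."""
    was_enabled = gl.glIsEnabled(capability)
    gl.glDisable(capability)
    try:
        yield
    finally:
        if was_enabled:
            gl.glEnable(capability)

class DisableNode:
    """Hypothetical scenegraph node: render children with a capability off."""

    def __init__(self, gl, capability, children):
        self.gl, self.capability, self.children = gl, capability, children

    def render(self):
        with gl_disabled(self.gl, self.capability):
            for child in self.children:
                child.render()
```

The context manager restores the capability even if a child raises, so a broken child cannot leave, say, depth testing (`GL_DEPTH_TEST`) switched off for the rest of the frame.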

The code and data is available in svn/qgl/trunk/demos/ec_intro.

Wednesday, August 23, 2006

That sweet smell of Py

After promising myself for years not to get involved with various web community fads (blogging, MySpace, etc.), I've resigned myself to the fact that this is indeed the future of online social commentary and exploration.

What better way to start than by announcing the third outing of our small band of game developers in the latest PyWeek competition? It's back! For those who aren't familiar with it, we urge you to check out the competition, view some previous years' entries, and perhaps, if you're feeling ballsy, enter yourself!

This will be our third effort after some pretty decent success in previous attempts. More news on the competition soon.

Game onward sir!

Monday, August 21, 2006

QGL has shaders

Thanks to some enlightenment from Alex Holkner (GLSLExample on the pygame cookbook), QGL now has shader support that actually works. The attached screenshot shows a Toon Shader in action.

A QGL shader has an update method, which passes named arguments to the shader program. Currently, the only supported data type for update operations is the humble float. Vector and matrix data types are coming soon.
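A sketch of what a float-only `update` could look like, assuming the OpenGL 2.0 entry points `glUseProgram`, `glGetUniformLocation` and `glUniform1f` on an injected `gl` object (older drivers expose ARB-suffixed equivalents such as `glUniform1fARB`). The class is hypothetical, not QGL's; uniform locations are cached so they are not looked up on every update.

```python
class Shader:
    """Hypothetical shader wrapper whose update() sets float uniforms by name."""

    def __init__(self, gl, program):
        self.gl = gl
        self.program = program   # handle of an already linked program
        self._locations = {}     # cache: uniform name -> location

    def _location(self, name):
        if name not in self._locations:
            loc = self.gl.glGetUniformLocation(self.program, name)
            if loc == -1:
                raise KeyError("no active uniform named %r" % name)
            self._locations[name] = loc
        return self._locations[name]

    def update(self, **uniforms):
        """Pass named float arguments to the shader program."""
        self.gl.glUseProgram(self.program)  # glUniform* targets the bound program
        for name, value in uniforms.items():
            self.gl.glUniform1f(self._location(name), float(value))
```

Vector and matrix support would follow the same pattern with calls such as `glUniform3f` and `glUniformMatrix4fv`.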

Thursday, August 17, 2006

ATI Lighting is Bugged

I develop on a notebook which has an ATI X600 mobility video card. I'm using the latest ATI Linux drivers.

Every now and then, my lighting tests only show the ambient light value (usually (0.2, 0.2, 0.2, 1.0)) and ignore the diffuse and specular light parameters. As a result, the entire scene is very dark, with no lighting effects at all. So, how do I fix this?

By tapping something on the keyboard.

Or clicking in the window.

This usually fixes the problem, until I click or tap again. I can do this a few times before the scene stays lit, or stays dark. I'm blaming the Linux video drivers, as this problem does not happen in Windows.

Sigh.

My next notebook will use Nvidia.

Tuesday, August 15, 2006

Shaders aren't that hard

I've been playing with glewpy, and have been able to create, compile and use some simple OpenGL vertex and fragment shaders.

I'm thinking of adding shader-type leaves to QGL. Not everyone has shader-capable hardware, so the leaves need to be optional. That means they can't live in the qgl.scene namespace; the programmer will need to import them explicitly.

If shaders are optional, then the render visitor shouldn't need to know about the OpenGL shader implementation. This presents a problem: how do I enable custom leaf execution in the Render and other visitor classes? In its current state, it is not obvious, as the Render visitor uses a big if/elif block to dispatch leaf drawing operations.

Hmmm.
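One way out of the if/elif block is a dispatch table keyed by leaf class, which optional modules fill in when they are imported. A minimal sketch under that assumption; `Render`, `register`, `visit_leaf` and `ShaderLeaf` are hypothetical names, and the handler returns a string where real code would make OpenGL calls.

```python
class Render:
    """Hypothetical render visitor with a pluggable leaf-dispatch table."""

    _handlers = {}  # leaf class -> function(visitor, leaf)

    @classmethod
    def register(cls, leaf_type):
        """Decorator: lets optional modules add drawing code for new leaves."""
        def decorator(fn):
            cls._handlers[leaf_type] = fn
            return fn
        return decorator

    def visit_leaf(self, leaf):
        # Walk the MRO so subclasses of a registered leaf are handled too.
        for klass in type(leaf).__mro__:
            handler = self._handlers.get(klass)
            if handler is not None:
                return handler(self, leaf)
        raise TypeError("no render handler for %s" % type(leaf).__name__)

# --- in an optional module, imported only on shader-capable hardware ---
class ShaderLeaf:
    def __init__(self, name):
        self.name = name

@Render.register(ShaderLeaf)
def render_shader(visitor, leaf):
    # A real handler would bind the shader program here.
    return "shader:" + leaf.name
```

The visitor never imports the shader code; importing the optional module is what registers its handler, which fits the requirement that shader leaves be imported explicitly.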